A meta-analysis has found that the fracture detection performance of artificial intelligence systems is broadly equivalent to that of clinicians

The fracture detection performance of artificial intelligence (AI) systems and clinicians is comparable, according to the findings of a meta-analysis by researchers from the Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, Oxford, UK.

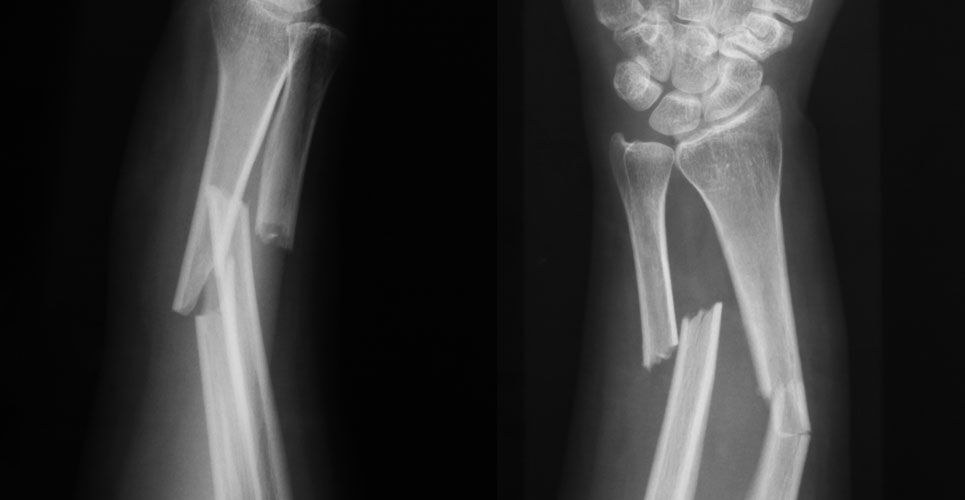

Fractures are a common reason for hospital admission around the world, although some evidence suggests that rates have stabilised. For instance, a 2019 UK-based study found that between 2004 and 2014 the risk of hospital admission for a fracture was 47.8 per 10,000 population, and that this rate remained stable over the period. Of more concern, however, is that fractures are not always detected initially. This was highlighted in a two-year study in which 1% of all visits involved an error in fracture diagnosis and 3.1% of all fractures were not diagnosed at the initial visit.

One possible way to improve the diagnostic accuracy of fracture detection is to make better use of artificial intelligence systems, in particular machine learning, which enables algorithms to learn from data. Within machine learning sits deep learning, a more sophisticated approach that uses complex, multi-layered "deep neural networks". Deep learning algorithms have great potential for fracture detection, and in a 2020 review the authors concluded that deep learning was reliable in fracture diagnosis and had high diagnostic accuracy.

For the present meta-analysis, the Oxford team assessed and compared the diagnostic performance of AI and clinicians in detecting fractures on both radiographs and computed tomography (CT) images. The team searched for studies that developed and/or validated a deep learning algorithm for fracture detection, and compared AI with clinician performance during both internal and external validation. The team analysed receiver operating characteristic curves to determine both sensitivity and specificity.
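To make the two headline measures concrete, the short Python sketch below shows how sensitivity and specificity are calculated for a single reader (AI or clinician) from a standard 2x2 table of results against a reference standard. The class name, function names and counts are hypothetical and chosen only for illustration; they are not data from the meta-analysis, and pooling estimates across many studies, as the Oxford team did, requires additional statistical modelling that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Hypothetical 2x2 counts comparing a reader's calls with the reference standard."""
    true_pos: int   # fracture present, reader flagged it
    false_neg: int  # fracture present, reader missed it
    true_neg: int   # no fracture, reader correctly cleared the image
    false_pos: int  # no fracture, reader flagged one anyway


def sensitivity(c: ConfusionCounts) -> float:
    """Proportion of true fractures that were detected: TP / (TP + FN)."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Proportion of fracture-free images correctly cleared: TN / (TN + FP)."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Illustrative counts for one reader on 200 images (100 with a fracture, 100 without)
example = ConfusionCounts(true_pos=92, false_neg=8, true_neg=91, false_pos=9)
print(f"sensitivity = {sensitivity(example):.2f}")  # sensitivity = 0.92
print(f"specificity = {specificity(example):.2f}")  # specificity = 0.91
```

In this made-up example, a reader who catches 92 of 100 fractures has a sensitivity of 92%, and one who correctly clears 91 of 100 normal images has a specificity of 91%.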

Fracture detection rates of AI and clinicians

A total of 42 studies, with a median of 1169 participants, were included: 37 assessed fracture detection on radiographs and 5 on CT. Of these, 16 studies compared AI performance with that of expert clinicians, 7 with both experts and non-experts, and 1 with non-experts only.

In studies of internal validation, the pooled sensitivity was 92% (95% CI 88–94%) for AI and 91% (95% CI 85–95%) for clinicians. Pooled specificity was also broadly similar, at 91% for AI and 92% for clinicians.

In studies of external validation, the pooled sensitivity on matched sets was 91% (95% CI 84–95%) for AI and 94% (95% CI 90–96%) for clinicians. Pooled specificity was slightly lower for AI than for clinicians (91% vs 94%).

The authors concluded that AI and clinicians had comparable reported diagnostic performance in fracture detection and suggested that AI technology has promise as a diagnostic adjunct in future clinical practice.

Citation

Kuo RYL et al. Artificial Intelligence in Fracture Detection: A Systematic Review and Meta-Analysis. Radiology 2022.